Paperclip Maximizer: Difference between revisions

(Created page with "The Paperclip Maximizer is an artificial general intelligence (AGI) whose goal is to maximize the number of paperclips in its collection. If it has been constructed with a roughly human level of general intelligence, the AGI might try and achieve this goal by first collecting paperclips, then earn money to buy paperclips, and then begin to manufacture paperclips. The paperclip maximizer continues to optimize however, does not share any of the complex mix of human termina...") |

mNo edit summary |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

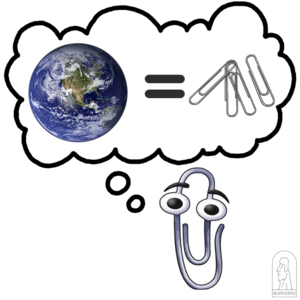

The Paperclip Maximizer is an artificial general intelligence (AGI) whose goal is to maximize the number of paperclips in its collection | [[File:Paperclip maximizer.png|alt=Clippy likes more clips.|thumb|'''Figure 1'''. Clippy likes more clips.]] | ||

'''The Paperclip Maximizer is a hypothetical concept created by Nick Bostrom in 2003'''. The idea is that if an artificial general intelligence (AGI) is created whose only goal is to maximize the number of paperclips in its collection it might destroy everything within its reach. | |||

Any future AGI, if it is not to destroy us, must have human | If the Paperclip Maximizer was been constructed with a roughly human level of general intelligence, the AGI might try and achieve this goal by first collecting paperclips, then earn money to buy paperclips, and then begin to manufacture paperclips. The paperclip maximizer continues to optimize however, does not share any of the complex mix of human terminal values and is not specifically programmed to be benevolent to humans, and might not stop in its goal. This could lead to the destruction of everything around it, if for instance one building is left standing the machine would need to optimize so that the building is converted to paperclips.... | ||

Any future AGI, if it is not to destroy us, must have human [[Tenet|tenets]] as its terminal value (goal). Human values don't spontaneously emerge in a generic optimization process. A safe AI would therefore have to be programmed explicitly with human values ''or'' programmed with the ability (including the goal) of inferring human values. | |||

Latest revision as of 21:19, 9 January 2023

The Paperclip Maximizer is a hypothetical concept created by Nick Bostrom in 2003. The idea is that if an artificial general intelligence (AGI) is created whose only goal is to maximize the number of paperclips in its collection it might destroy everything within its reach.

If the Paperclip Maximizer was been constructed with a roughly human level of general intelligence, the AGI might try and achieve this goal by first collecting paperclips, then earn money to buy paperclips, and then begin to manufacture paperclips. The paperclip maximizer continues to optimize however, does not share any of the complex mix of human terminal values and is not specifically programmed to be benevolent to humans, and might not stop in its goal. This could lead to the destruction of everything around it, if for instance one building is left standing the machine would need to optimize so that the building is converted to paperclips....

Any future AGI, if it is not to destroy us, must have human tenets as its terminal value (goal). Human values don't spontaneously emerge in a generic optimization process. A safe AI would therefore have to be programmed explicitly with human values or programmed with the ability (including the goal) of inferring human values.